How rich is a galaxy cluster?

October 13, 2014

Post by Tom Kitching

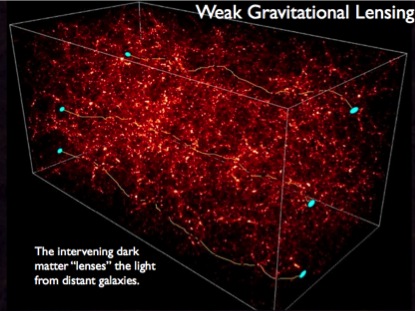

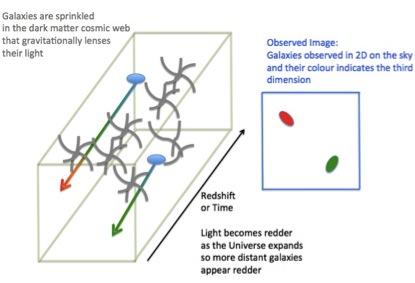

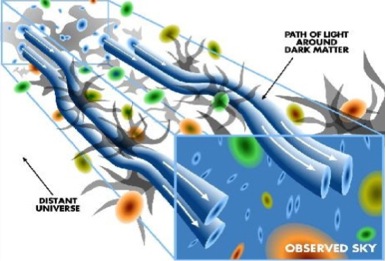

In a recent paper http://arxiv.org/abs/1409.3571 led by Jes Ford http://www.phas.ubc.ca/~jesford/Welcome.html, the mass-“richness” relation of galaxy clusters was investigated using data from the CFHTLenS survey.

A galaxy cluster is a cluster of galaxies… Galaxies are swarms of stars held together in a common gravitational potential; in an analogous way, galaxy clusters are swarms of galaxies held together in a larger gravitational potential.

“Richness” is a bit of astronomical jargon that refers to the number of bright galaxies in a cluster. A cluster is “rich” if it has many massive galaxies and not rich if it has few or none. In fact, in a way that sounds quite PC, a galaxy cluster is never referred to as “poor”; instead, some clusters are said to have “very low richness”. The term was first defined in the 1960s, and in the paper the richness is defined as follows:

> [the richness of a cluster] is defined to be the number of member galaxies brighter than absolute magnitude Mi ≤ −19.35, which is chosen to match the limiting magnitude at the furthest cluster redshift that we probe
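To make the definition concrete, here is a minimal sketch of counting richness once cluster membership is known. It assumes each galaxy already carries an absolute i-band magnitude and a membership flag; the `Galaxy` class, `is_member` flag and `richness` function are hypothetical illustrations, not code from the paper, and the membership test itself (the hard part) is not shown. Because astronomical magnitudes decrease as brightness increases, “brighter than −19.35” means a magnitude at or below −19.35.

```python
from dataclasses import dataclass

# Threshold from the quoted definition: absolute i-band magnitude M_i = -19.35.
MAG_LIMIT = -19.35


@dataclass
class Galaxy:
    abs_mag_i: float  # absolute i-band magnitude (more negative = brighter)
    is_member: bool   # hypothetical flag set by some cluster-membership test


def richness(galaxies: list[Galaxy], mag_limit: float = MAG_LIMIT) -> int:
    """Count cluster members at least as bright as mag_limit (abs_mag_i <= mag_limit)."""
    return sum(1 for g in galaxies if g.is_member and g.abs_mag_i <= mag_limit)


# Usage: two members pass the cut, one member is too faint,
# and the bright galaxy is excluded because it is not a member.
example = [
    Galaxy(-21.0, True),
    Galaxy(-19.5, True),
    Galaxy(-18.9, True),
    Galaxy(-22.0, False),
]
print(richness(example))  # 2
```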

The clusters were detected using a 3D matched-filter method, which found a very large number of them: 18,056 cluster candidates in total. A sample this large allowed the statistical properties of the cluster population to be measured.

The total significance of the shear measurement behind the clusters amounts to 54σ, which corresponds to a (frequentist) probability of 1 − 4.645×10⁻⁶³⁶, or a

99.99999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999

9999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999

9999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999

9999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999

9999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999

9999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999999

99999999999999999%

chance that we have detected a weak lensing signal behind these clusters and groups!
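Where does 4.645×10⁻⁶³⁶ come from? A minimal sketch, assuming the quoted figure is the one-sided tail probability of a standard Gaussian beyond 54σ (this convention reproduces the number above). The tail is far smaller than the smallest positive double, so a naive `math.erfc` call would just return 0; instead the sketch works in logarithms using the standard large-x asymptotic expansion of the Gaussian tail. The function names are illustrative only.

```python
import math


def gaussian_tail_log10(n_sigma: float, terms: int = 6) -> float:
    """log10 of the one-sided tail P(Z > n_sigma) for a standard normal Z.

    Uses the asymptotic expansion
        Q(x) ~ phi(x)/x * (1 - 1/x**2 + 3/x**4 - 15/x**6 + ...),
    which is extremely accurate for large x. Working in logs avoids
    underflow: a 54-sigma tail is far below the smallest positive float.
    """
    x = float(n_sigma)
    series, term = 0.0, 1.0
    for k in range(terms):
        series += term
        term *= -(2 * k + 1) / (x * x)  # next term: multiply by -(2k+1)/x^2
    log_q = (-0.5 * x * x - 0.5 * math.log(2 * math.pi)
             - math.log(x) + math.log(series))
    return log_q / math.log(10)


def scientific(log10_value: float) -> tuple[float, int]:
    """Split a base-10 logarithm into (mantissa, exponent), 1 <= mantissa < 10."""
    exponent = math.floor(log10_value)
    return 10 ** (log10_value - exponent), exponent


mantissa, exponent = scientific(gaussian_tail_log10(54))
print(f"P(Z > 54) ~ {mantissa:.3f}e{exponent}")
```

The percentage written out above is then 100 × (1 − P), which is why it runs to hundreds of nines.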

The main result in the paper was the measurement that the mass of clusters increases with richness according to the relation M200 = M0(N200/20)^β. This is perhaps to be expected: more massive clusters should contain more bright galaxies, since, after all, a cluster is defined as a collection of galaxies. We found a normalization M0 ∼ 2.7 × 10^13 solar masses (with an upper uncertainty of +0.5 × 10^13) and a logarithmic slope β ∼ 1.4 ± 0.1.
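The power law can be evaluated directly. Below is a minimal sketch that uses only the central values quoted above (M0 = 2.7 × 10^13 solar masses, β = 1.4, pivot richness 20) and ignores the uncertainties, the intrinsic scatter and any redshift dependence; the function names are hypothetical. It also checks numerically what “logarithmic slope” means: on a log-log plot the relation is a straight line whose slope is β.

```python
import math

# Central values quoted above; uncertainties are ignored in this sketch.
M0_SOLAR = 2.7e13  # normalization in solar masses, at the pivot richness
PIVOT = 20.0       # pivot richness, N200 = 20
BETA = 1.4         # logarithmic slope


def mass_from_richness(n200: float, m0: float = M0_SOLAR, beta: float = BETA) -> float:
    """M200 = M0 * (N200 / 20)**beta, in solar masses."""
    return m0 * (n200 / PIVOT) ** beta


def log_slope(n_a: float, n_b: float) -> float:
    """d ln M / d ln N between two richnesses; equals beta for a pure power law."""
    d_ln_m = math.log(mass_from_richness(n_b)) - math.log(mass_from_richness(n_a))
    return d_ln_m / (math.log(n_b) - math.log(n_a))


for n in (10, 20, 40, 80):
    print(f"N200 = {n:3d}  ->  M200 ~ {mass_from_richness(n):.2e} solar masses")
print(f"log-log slope between N200 = 10 and 80: {log_slope(10, 80):.3f}")
```

With β = 1.4, doubling the richness multiplies the mass by 2^1.4 ≈ 2.6, so mass grows faster than the number of bright galaxies.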

Curiously, no redshift dependence of the normalization was found. This suggests that whatever mechanism regulates the number of bright galaxies in clusters is not affected by the evolution of cluster properties over time. We do not know why this relationship should not change over time, or why it has the values it does, but we hope to find out soon.
